Between Revenue Growth And Cost Explosion: Defining A Scalable Pricing Model For Your (Agentic) AI Product

Why Monetization Models Need to Consider Multiple Factors Early to Avoid Headaches Later

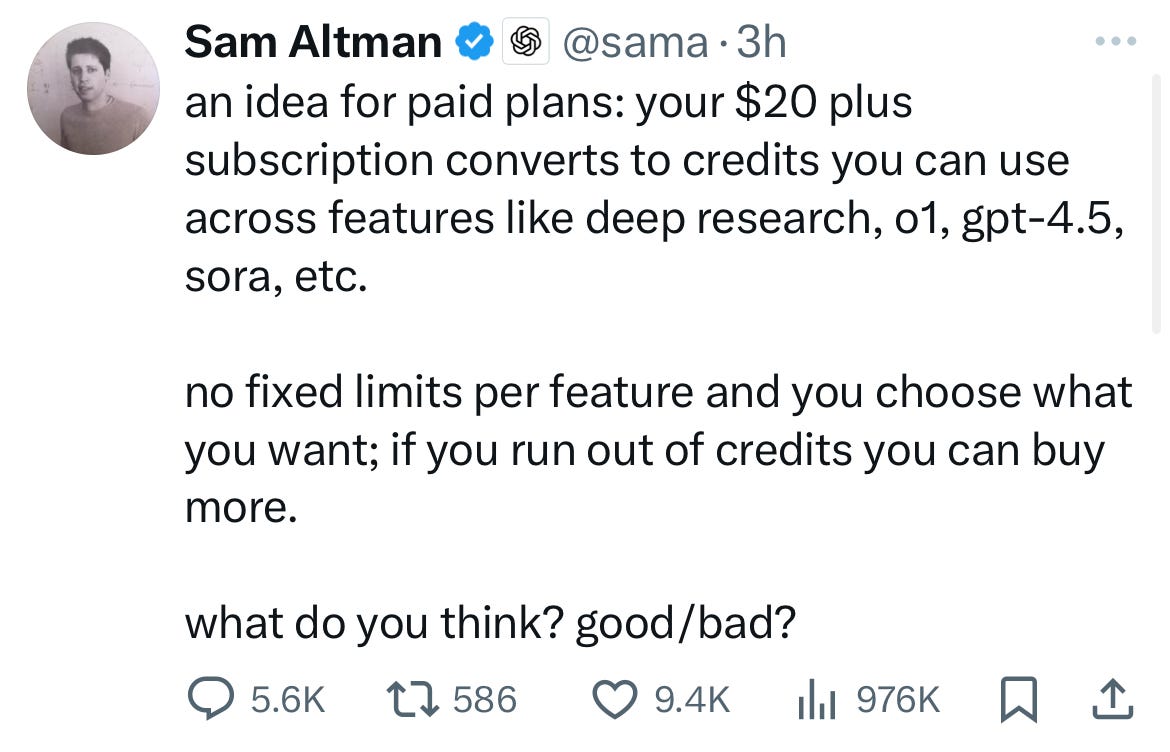

Sam Altman’s tweet last week made me pause. OpenAI made history: Within two months of ChatGPT’s release, it had 100 million active users, making it the fastest-growing application in history at the time. That was two years ago. Now, it has 400 million weekly active users! The fact that OpenAI is contemplating a change to ChatGPT’s pricing model highlights a common problem in Generative AI pricing: unpredictable, rising costs eating away at your margin. And this problem will only be exacerbated with AI agents that collaborate with other agents over multiple iterations or when output needs to be regenerated.

If it happens to ChatGPT, chances are it will happen to your (Agentic) AI products, too. But it’s preventable. Let’s break it down…

Increased Cost and Usage Eating Away at OpenAI’s ChatGPT Margin

Early on, OpenAI set the price for ChatGPT Plus at $20 per user per month. It was likely based on the token cost for GPT-3.5 at the time, the expected average consumption per user (cost to OpenAI), and consumers’ willingness to pay.

In the U.S. and other developed countries, $20 per user per month is a reasonable price point for a new and novel tool like ChatGPT. Users are willing to pay that amount in exchange for productivity gains.

To limit its financial risk from users consuming significantly more tokens than $20 per month covers (aka overconsumption) and to accommodate a surge in new users, OpenAI set up rate limits: 50 prompts within a 3-hour window. Soon, OpenAI’s competitors introduced their assistants at the same price point, for example, Google Bard/Gemini and Anthropic Claude. (OpenAI eventually dropped rate limits on the subscription plan.)

Over the past two years, OpenAI has added newer large language models to ChatGPT (such as GPT-4), multi-modal models that can handle images and audio (GPT-4o and GPT-4.5), and reasoning models (o1 and o3). The latter have introduced the consumption of reasoning tokens in addition to standard prompt and output tokens, along with longer processing and self-reflection times to generate output. Add on top of that new features like custom GPTs for repeatable prompts and tasks, Sora for video generation, Web Search, and Deep Research.

All of these features are highly compute-intensive and create higher and, above all, highly variable costs depending on how each user uses the tool. Meanwhile, the price ($20 per user per month) has remained constant. Whatever margin or calculated loss for customer acquisition OpenAI had factored in would have suffered over time.
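To make the margin squeeze concrete, here is a minimal sketch of a flat $20 price set against a variable serving cost. Only the $20 price comes from the article; the usage profiles, token volumes, and blended cost per million tokens are hypothetical values chosen for illustration and are not OpenAI’s actual figures.

```python
# Hypothetical numbers for illustration only; not OpenAI's actual costs.
PRICE_PER_USER = 20.00  # flat monthly subscription price in USD (from the article)


def monthly_margin(tokens_used: int, cost_per_million_tokens: float) -> float:
    """Return the vendor's gross margin in USD for one user in one month."""
    serving_cost = tokens_used / 1_000_000 * cost_per_million_tokens
    return PRICE_PER_USER - serving_cost


# Assumed profiles: (label, tokens per month, blended cost per 1M tokens in USD)
profiles = [
    ("occasional user", 500_000, 5.0),
    ("regular user", 2_000_000, 5.0),
    ("power user on reasoning models", 4_000_000, 15.0),
]

for label, tokens, cost in profiles:
    print(f"{label}: margin = ${monthly_margin(tokens, cost):.2f}")
```

Under these assumptions, the same $20 subscription is profitable for the first two profiles and loss-making for the third, which is the overconsumption risk described above.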

With 400 million weekly active users, user adoption has clearly skyrocketed, and the problem has ballooned as well. If you are OpenAI, competition from AI labs such as Anthropic, Cohere, and Mistral, as well as Google, Microsoft, and others, forces you to quickly match or outpace your competitors’ features to avoid falling behind.

Add the recent cost pressure since the release of the Chinese DeepSeek assistant and reasoning model R1 at a fraction of your token cost, and you end up getting pressure from multiple angles.

It illustrates a fundamental challenge in pricing Generative AI applications, which will extend into Agentic AI as well: avoiding cost overruns due to consumption above forecast.

So, what can you do if you are a Chief Product Officer or Head of Go-to-Market of a Generative AI-enabled or Agentic AI product?

Adjusting Pricing Variables to Mitigate Cost Overruns

Pricing comes down to the fundamentals of economics and the concept of price elasticity of demand. AI assistants such as ChatGPT can be easily substituted. They are an example of products that have a relatively elastic demand: a price increase leads to a more than proportional decline in demand for the product.

Despite Generative AI getting traction just over the past 10 quarters, it is a market characterized by substitutable products of similar quality and capabilities coupled with low switching costs. Generative AI is a basic technology.
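As a rough illustration of what elastic demand means for revenue, the sketch below computes the simple (non-midpoint) price elasticity of demand, i.e., the percentage change in quantity divided by the percentage change in price. The price change and subscriber counts are hypothetical and only serve to show the mechanics.

```python
def price_elasticity(old_price: float, new_price: float,
                     old_qty: float, new_qty: float) -> float:
    """Percentage change in quantity divided by percentage change in price."""
    pct_qty = (new_qty - old_qty) / old_qty
    pct_price = (new_price - old_price) / old_price
    return pct_qty / pct_price


# Hypothetical scenario: price goes from $20 to $25 (+25%),
# and paying subscribers drop from 1,000 to 600 (-40%).
elasticity = price_elasticity(20, 25, 1_000, 600)
revenue_before = 20 * 1_000
revenue_after = 25 * 600

print(f"elasticity: {elasticity:.2f}")  # magnitude above 1 means elastic demand
print(f"revenue before: ${revenue_before}, after: ${revenue_after}")
```

In this made-up scenario, the elasticity has a magnitude above 1, so the price increase reduces total revenue even though each remaining subscriber pays more.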

Vendors like OpenAI have several variables they can adjust in their pricing model:

Increase the price: Keep the current “all you can eat” feature scope and raise the price to $50 per user per month to account for the higher costs that come with increased usage.

Introduce pricing tiers: Add differentiation for various user types ranging from occasional to power users, e.g., $20, $50, $100, and $200 per user per month, coupled with usage limits such as 2,000, 5,000, 10,000, and 20,000 prompts.

Maintain the price: Do not change the price of $20 per user per month, and instead change what users get for this amount.

Reduce feature set: For example, reduce the basic scope of ChatGPT to just chat-based interaction via GPT-4o/GPT-4.5—no DALL-E, Sora, image- or audio-processing capabilities.

Limit transaction volume (via credits): As Sam Altman suggests, use a credit-based approach in which users receive a quota of credits at the beginning of the month which can be used for a variety of capabilities (e.g., text, image, audio, and video). Generating output consumes a pre-defined number of credits until the user’s credits have been depleted. This model offers some flexibility for users in their use of different capabilities, and it protects the vendor from cost overruns as the dollar-to-credit conversion would be based on the most expensive service. This might work for B2B customers who actually want choice. But it is more complex for consumers, as vendors will likely need to introduce a conversion factor for each capability based on the underlying resource cost.

Illustrative example:

GPT-4o-mini: 0.3 credits per input token, 0.5 credits per output token

GPT-4o: 0.7 credits per input token, 1 credit per output token

GPT-4.5: 1.2 credits per input token, 1.5 credits per output token

DALL-E 3: 1.5 credits per input token, 20 credits per generated image

Sora: 3 credits per input token, 25 credits per second of generated video

o1: 5 credits per input token, 7 credits per output token

o3: 8 credits per input token, 11 credits per output token

Deep Research: 22 credits per input token, 30 credits per output token

Customers will struggle to understand and estimate how many credits they need or how far a set number of credits will get them.
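The following sketch shows why that estimate is hard for customers. It uses a subset of the illustrative credit rates listed above; the monthly quota of 1,000,000 credits and the request size (200 input tokens, 500 output tokens) are assumptions added purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CreditRate:
    per_input_token: float
    per_output_unit: float  # per output token, image, or second of video


# Subset of the illustrative rates from the list above.
RATES = {
    "GPT-4o-mini": CreditRate(0.3, 0.5),
    "GPT-4o": CreditRate(0.7, 1.0),
    "o3": CreditRate(8.0, 11.0),
    "Deep Research": CreditRate(22.0, 30.0),
}


def credits_for_request(model: str, input_tokens: int, output_units: int) -> float:
    """Credits consumed by a single request for the given model."""
    rate = RATES[model]
    total = input_tokens * rate.per_input_token + output_units * rate.per_output_unit
    return round(total, 4)  # avoid floating-point noise in the sum


def requests_per_quota(model: str, quota: float,
                       input_tokens: int, output_units: int) -> int:
    """How many identical requests fit into a credit quota."""
    return int(quota // credits_for_request(model, input_tokens, output_units))


MONTHLY_QUOTA = 1_000_000  # hypothetical quota, not from the article

for model in RATES:
    n = requests_per_quota(model, MONTHLY_QUOTA, input_tokens=200, output_units=500)
    print(f"{model}: {n} requests per month")
```

With these assumed values, the same quota covers a few thousand requests on the smallest model but only a few dozen Deep Research requests, so a user cannot judge how far their credits go without knowing which capabilities they will use.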

Add on top of that the question of what happens when customers run out of credits:

Hard limit: Once a user has used up their current month’s credits, they need to wait until the next billing cycle to receive new credits (e.g., image generation in Canva).

Throttling: Once a user has exceeded a pre-defined number of priority-processing credits (or minutes), generating new output is throttled based on off-cycle resource availability and lower resource requirements (e.g., video generation in HeyGen).

Consumption-based: Users buy a number of credits for a fixed price; generating output consumes credits from this quota (e.g., API calls for developers such as Stability AI or OpenAI).

Expiration: Any remaining, unused credits expire at the end of the billing period (aka “use it or lose it”; e.g., ElevenLabs, Microsoft, or SAP). For B2C products, the typical period is monthly, whereas in B2B, this period is annual or spans a 3-to-5-year contract period. (The latter creates liabilities and potential revenue recognition issues.)
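The options above can be sketched as a small policy function. This is a simplified model under stated assumptions, not any vendor’s actual implementation: the policy names, the status strings, and the per-credit top-up price are hypothetical, and the consumption-based option is modeled here as billing the user for the missing credits.

```python
from enum import Enum


class Policy(Enum):
    HARD_LIMIT = "hard_limit"  # wait for the next billing cycle
    THROTTLE = "throttle"      # keep serving, but at low priority
    TOP_UP = "top_up"          # bill the missing credits (assumed variant)


def handle_request(balance: float, cost: float, policy: Policy,
                   price_per_credit: float = 0.0):
    """Return (new_balance, status, extra_charge_usd) for one request."""
    if cost <= balance:
        return balance - cost, "served", 0.0
    if policy is Policy.HARD_LIMIT:
        return balance, "rejected", 0.0
    if policy is Policy.THROTTLE:
        return balance, "served_throttled", 0.0
    shortfall = cost - balance
    return 0, "served", shortfall * price_per_credit


def start_new_period(balance: float, monthly_grant: float, expires: bool = True) -> float:
    """Unused credits are lost if they expire; otherwise they carry over."""
    return monthly_grant if expires else balance + monthly_grant


print(handle_request(10, 30, Policy.HARD_LIMIT))
print(handle_request(10, 30, Policy.THROTTLE))
print(handle_request(10, 30, Policy.TOP_UP, price_per_credit=0.01))
print(start_new_period(40, 100, expires=True))
```

Each branch shifts the trade-off differently: the hard limit caps the vendor’s cost but blocks the user, throttling keeps the user working at lower quality of service, and topping up passes the variable cost on to the user.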

Adjusting any of these variables can help mitigate the impact of increased cost and usage on your product’s margin. But software vendors are not the first to change their dominant pricing model.

Historic Pricing Model Changes Across Industries and Learnings for (Agentic) AI

OpenAI’s CEO is contemplating a change of their pricing model. This highlights a significant shift in the software industry and the factors that pricing models need to consider for any vendor to build and scale a profitable business.

Again, it is largely caused by vendors setting a fixed price while the underlying costs are highly variable, and the volume of these transactions exceeds the initial forecast upon which the current pricing model is based.

But this type of pricing isn’t unique to the software industry or OpenAI. Most of us use services that have similar pricing models:

Utilities are accurately metered. You consume a kWh of electricity, and you pay a predefined rate. Your cost at the end of the month is a function of the unit cost multiplied by your consumption. Your utility bill varies between different times of the year, whether you are at home or on vacation. We accept it as a given that the price directly depends on our consumption.

Mobile phone billing has moved from a contract fee plus a number of minutes and text messages to an all-inclusive model with limits (e.g., 5 GB of high-speed data). After you hit the limit, your access might be throttled to a lower speed (e.g., 256 kbps instead of 5G speeds), or you can buy additional capacity or pay based on consumption.

Internet access has moved from per-minute pricing to per-MB pricing for downloads and uploads (25 years ago), then to flat-rate pricing (20 years ago), and now to flat-rate pricing with an upper limit of hundreds of GB per month. You pay a monthly flat fee that is independent of your actual usage. If you don’t exhaust the maximum download volume (e.g., 250 GB/month), you are leaving money on the table.

Car leasing allows you to drive a car up to a quota of miles for the duration of your leasing contract. The cost of maintenance, inspection, etc. is priced into your monthly rate. Similar to your Internet access, if you drive your car less than expected and stay below the mileage limit, you leave money on the table. If you exceed the number of miles included in your contract, you need to pay extra at the end of your contract term.
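The four examples reduce to two billing shapes: pure metering, and a flat fee with an included allowance plus optional overage. The sketch below compares them. The 250 GB allowance comes from the example above; every price, rate, and usage figure is hypothetical.

```python
def metered_bill(units_used: float, unit_rate: float) -> float:
    """Utilities: you pay exactly for what you consume."""
    return units_used * unit_rate


def allowance_bill(flat_fee: float, included_units: float,
                   units_used: float, overage_rate: float) -> float:
    """Flat fee with an included allowance; usage above it is billed per unit."""
    overage = max(0, units_used - included_units)
    return flat_fee + overage * overage_rate


def effective_unit_cost(total_bill: float, units_used: float) -> float:
    """What one consumed unit actually cost the customer."""
    return total_bill / units_used


# Electricity (hypothetical rate): 300 kWh at $0.25 per kWh.
print(metered_bill(300, 0.25))

# Internet (hypothetical $50 flat fee, 250 GB included, no overage billing).
for used_gb in (100, 250):
    bill = allowance_bill(50.0, 250, used_gb, 0.0)
    print(used_gb, "GB ->", effective_unit_cost(bill, used_gb), "USD per GB")

# Car lease (hypothetical): $10,800 over the term, 30,000 miles included,
# 33,000 miles driven, $0.25 per extra mile.
print(allowance_bill(10_800.0, 30_000, 33_000, 0.25))
```

Using less than the allowance raises the effective cost per unit, which is the "money left on the table" in the internet and leasing examples, while exceeding it triggers the overage charge.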

So why is changing software pricing so hard?

In the four cases above, the pricing model has reduced complexity, provided more flexibility, or benefited from declining costs. Paying a flat fee for access to a car without the depreciation and variance in maintenance creates predictable cost and convenience. Higher internet speed and download volumes when you need them do the same. Accurate, metered billing conserves natural resources and puts responsibility in the users’ hands.

In OpenAI’s ChatGPT situation, users already have this convenience today: flat-fee pricing for variable usage. Dialing it back to consumption-based pricing (or introducing limits) will likely create friction, which could eventually lead to customer churn. OpenAI might be able to compensate for it through the higher price point or through users paying extra for overages. Or maybe OpenAI even wants to drive out overconsumers to improve its margin.

In any case, users won’t be happy if the change is a perceived step down from the status quo.

Summary

OpenAI has pioneered the $20 “all-you-can-eat” price point for AI assistants while skyrocketing to 400 million weekly users. But Sam Altman contemplating a change of the pricing model highlights the growing challenge in monetizing AI applications: a fixed price despite highly variable underlying costs.

This problem is only going to be exacerbated as more and more vendors bring their AI agents to market. However, vendors who get into this market early can significantly disrupt incumbents and the status quo of monetization models.

Understanding the various levers vendors have to adjust the price or the feature set of the (Agentic) AI application is a prerequisite for defining the optimal model, from both a vendor’s and a customer’s point of view. Simplifying pricing to increase convenience or flexibility will likely be perceived much better by customers than introducing friction to an established model.

Want to learn how you can set up your company’s Agentic AI pricing model? Register to join a live cohort and start monetizing your Agentic AI products!

Together, let’s turn hype into outcome. 👍🏻

—Andreas