AI Labs Are Training Their Models On Your Papers, Books, And Posts—So What?

Between Outrage and Indifference Toward Big Tech's Practices—and What Data & AI Leaders Can Adopt

Have you ever felt indifferent about an issue to the point where you have questioned whether this indifference is actually an adequate reaction given the circumstances? Lately, I’ve been finding myself going through exactly these motions.

A few days ago, The Atlantic¹ published an article about Meta using millions of books, articles, and research papers from the so-called “LibGen” dataset to train its AI models. You can check whether any of your work is included and has therefore likely been used to train Meta’s Llama models. I entered my name, and here is what I found…

Checking If Your Work Has Been Used

Indeed, the search returned a 7-year-old research paper that I co-authored when the machine learning hype was starting to build: “Machine Learning: Introduction and Use Cases in Customer Retention Management.” At the time, we published it as Open Access to make the findings accessible to anyone. In academia, it is an established practice to cite the sources. According to Google Scholar, it has been cited 47 times.

When I saw that Meta has apparently used this paper among millions of others, my initial reaction was: “So what?”

The following thoughts kept running through my mind:

It was written in 2018. (→ a lot has changed since then)

It was published for anyone to read. (→ …and cite!)

Was it used for content (information) or for structure (German version)?

Should I feel some sense of pride? (→ but it’s not like someone handpicked it)

What about other authors who have had hundreds of their papers and books ingested?

What if my book had been part of the dataset? (→ how would it change my perspective)

Does Llama reuse parts of the content word-for-word or as broad ideas? How does it differ from anyone who reads the same information?

Is it legal for tech companies to use that kind of data? (→ what about remuneration and royalties—maybe not in our case, but for others)

Well, Meta isn’t the only one doing it. OpenAI is facing several lawsuits (→ New York Times vs. OpenAI). Thomson Reuters recently won a copyright ruling against ROSS Intelligence over the use of its content to train a competing AI product.

Is the argument brought forward in the Atlantic article (engineers determined it would “take too long”) valid? Does it justify using that data without asking for consent or permission?

ONLINE COURSE — Agentic AI: Challenges and Opportunities for Leadership

Prepare for the challenges and opportunities of agentic AI as you lead AI transformation in your organization. Maximize the benefits of these technologies while minimizing disruption.

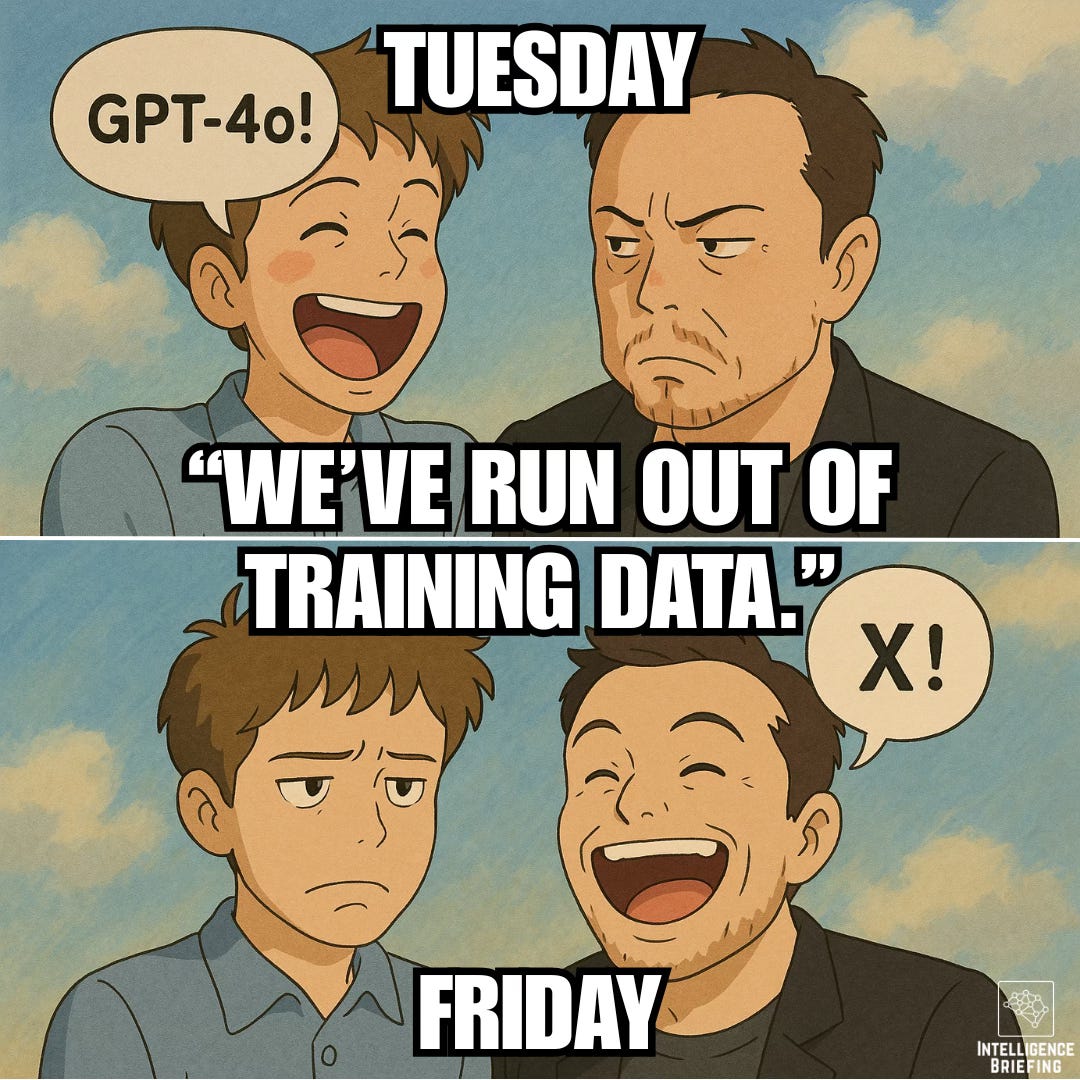

Between “Fair Use” and Running Out Of Public Data

This is not the first time that an AI company has been in the news for using public, or seemingly public, data. Sometimes, the argument is “fair use” and that anybody could copy or mimic a writer’s or artist’s style anyway. This stands in contrast to repeated statements by AI leaders that their labs have exhausted the publicly available data on the internet. Irrespective of the justification, are we growing indifferent and simply accepting that this is the way things are?

As if that wasn’t enough, a few days after the story in The Atlantic, OpenAI released an update to its image-generation feature within ChatGPT. Users generated images in the style of the Japanese animation studio Studio Ghibli with incredible precision. Influencers quickly encouraged their followers to create images in the same style to test ChatGPT’s new capabilities.

A day later, a public reckoning followed: these images could only be created because OpenAI’s model had been fed enough examples of this particular style, without compensating the original creators at Studio Ghibli. Has ChatGPT diluted the value of Studio Ghibli movies, or has it led to their renewed popularity? Is that even a valid question, given the circumstances?

Although the story was not about pirated data, X (fka Twitter) was in the news a few days after OpenAI. Elon Musk, X’s owner, has sold the company to xAI, which develops the generative AI model Grok and which he also owns. This creates a flywheel effect that few other companies have been able to build: data > AI > platform. Meta (fka Facebook) is the only other company doing it publicly at a similar scale.

What does all of that mean? Data is the most important asset for any impactful AI system. But it’s not just big tech that is looking to data as the new oil.

The Underestimated Value of Business Data

Figuratively speaking, you, too, are sitting on an oil field of data—your company’s business data. It’s the kind of data that AI labs cannot access so easily (if at all). From process descriptions to plans, instructions, contracts, material data, suppliers, customer data, and more. It’s the codified knowledge of what makes your business unique and what gives it its competitive edge.

But unless you can make that data usable, it’s just bits and bytes. If xAI and Meta recognize the value of their business data, so should you and the leaders in your organization.

There’s an added benefit that no one is talking about, though. Unlike data on consumer-centric platforms like social media, your transactional business data is factually accurate simply because it has to be. Financial data has to be 100% accurate 100% of the time (not just sometimes). HR data, salary data, product data and specs, supply chain data, procurement data: you name it, it all has to be accurate.

Last week, I spoke with 50+ data leaders at multiple events with DAMA (Data Management Association) chapters in Philadelphia, Austin, and the Bay Area. While the industry focuses on AI and AI agents, data often seems like an afterthought, and that’s a problem because data is the foundation for AI projects that drive tangible business value.

Every AI project is automatically also a data project. While it might be tough to get funding for a data management or data governance project, it will be easier to do parts of that work as a “step 0” of an AI project.

There’s a lot more nuance to these topics, be it using training data without remuneration or an AI company acquiring a social media platform that uses its technology: ethics, influence, media, etc.—I’d love to hear your perspective.

Become an AI Leader

Join my bi-weekly live stream and podcast for leaders and hands-on practitioners. Each episode features a different guest who shares their AI journey and actionable insights. Learn from your peers how you can lead artificial intelligence, agentic AI, generative AI & automation in business with confidence.

Join us live

April 08 (members-only) - Maxim Ioffe (Head of Automation at WESCO Distribution) will discuss setting AI governance programs between IT and the business.

April 15 - Matt Lewis (Chief Augmented Intelligence Officer of LLMental.ai) will share how CEOs can lead with AI. [More details soon…]

May 28 (members-only) - Barr Moses (CEO & Co-Founder of Monte Carlo) will provide insights into having reliable data for AI and Agentic AI projects.

Follow me on LinkedIn for daily posts about how you can lead AI in business with confidence. Activate notifications (🔔) and never miss an update.

Together, let’s turn hype into outcome. 👍🏻

—Andreas

¹ The Atlantic. Search LibGen, the Pirated-Books Database That Meta Used to Train AI. March 20, 2025. https://www.theatlantic.com/technology/archive/2025/03/search-libgen-data-set/682094/