Generative AI's Culture Effect: Imposing American Worldviews And Overcorrecting Output

Why Generative AI Models Are As Biased As We Are And How To Address It

It wouldn’t be the first time.

American culture and pop culture have influenced societies globally for decades, from music and movies to brands and language. (“Cool, huh?”) But that influence pales in comparison to what is yet to come, and what is already here, thanks to Generative AI-driven language and image generation.

Why is that a problem? Let’s dig in…

It All Starts With Data

It’s a known fact: Data contains bias. That’s because we have biases ourselves that are captured in data, including the preferences every one of us has, our values, and our world views. You’ll likely see at least one infographic about conscious and unconscious biases in your social media feed on any given day. Multiply these individual biases by any number of people in a group, and you have a data set that is easily skewed toward certain preferences. The foundations of statistics come to mind — population size, sample size, and statistical significance.

Last year, The Washington Post [1] attempted to estimate the training data and sources of OpenAI’s GPT models. It’s pretty clear that the data comes from all corners of the internet, and that includes not just the pretty ones. (Hello, X and Reddit.) In practical terms, if you train on more data from Western, English-speaking, U.S.-American sources and users, the model is more likely to detect and repeat patterns that represent that specific culture and worldview.

Some might say representing that Western worldview is a wonderful thing. And it is — especially if you see yourself represented. But what if you don’t? If that same model is used in non-American and non-Western societies, the inherent biases and preferences encoded in these models are also imposed on other cultures.

Let's ask image generator Midjourney to generate a “Portrait of a business leader.” → The result: pretty male, pretty Caucasian, and of a certain age.

From Oversampling To Overcorrecting

Three common ways that data scientists address bias in data sets for traditional machine learning projects are pre-filtering data, oversampling (to increase the representation of otherwise underrepresented groups), or using synthetic data. If access to more diverse data is not possible or if there is just insufficient data available to mitigate bias, additional methods are needed beyond the statistical distribution within the model itself.
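To make oversampling concrete, here is a minimal sketch in Python. The records, the `region` field, and the `oversample` helper are made up for illustration; a real project would more likely rely on a dedicated library and a much larger data set.

```python
import random
from collections import Counter


def oversample(records, group_key, seed=0):
    """Randomly duplicate records from smaller groups until
    every group is as large as the largest one."""
    rng = random.Random(seed)

    # Bucket the records by the attribute we want to balance.
    by_group = {}
    for record in records:
        by_group.setdefault(record[group_key], []).append(record)

    target = max(len(members) for members in by_group.values())

    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw the missing records with replacement from the same group.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Hypothetical, heavily skewed data set: 8 U.S. records, 2 non-U.S. records.
records = [{"region": "US"}] * 8 + [{"region": "non-US"}] * 2
balanced = oversample(records, "region")
counts = Counter(record["region"] for record in balanced)
print(counts)
```

Note that oversampling only repeats examples that already exist. It evens out the counts, but it does not add any new information about the underrepresented group.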

In the case of text and image generation, this could mean augmenting the prompt with additional information to compensate for the low degree of diversity. Developers might instruct the model to generate output within certain guardrails or with more diversity than the model contains out of the box.
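As a rough sketch of what such prompt augmentation could look like in code: the `augment_prompt` function and the `DIVERSITY_HINT` constant below are hypothetical names, and nothing here reflects how Midjourney or any other provider actually implements it.

```python
# Hypothetical hint that a developer might append to every prompt.
DIVERSITY_HINT = "Diverse ethnic backgrounds."


def augment_prompt(user_prompt, hint=DIVERSITY_HINT):
    """Append the hint to the user's prompt unless it is already there."""
    prompt = user_prompt.strip()
    if hint.lower() in prompt.lower():
        return prompt
    return f"{prompt} {hint}"


augmented = augment_prompt("Portrait of a business leader.")
print(augmented)
```

The user only types the first sentence; the second one is added behind the scenes before the prompt reaches the model.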

Let’s augment the previous Midjourney prompt with “Diverse ethnic backgrounds.“ → The result: Indeed, more ethnic diversity.

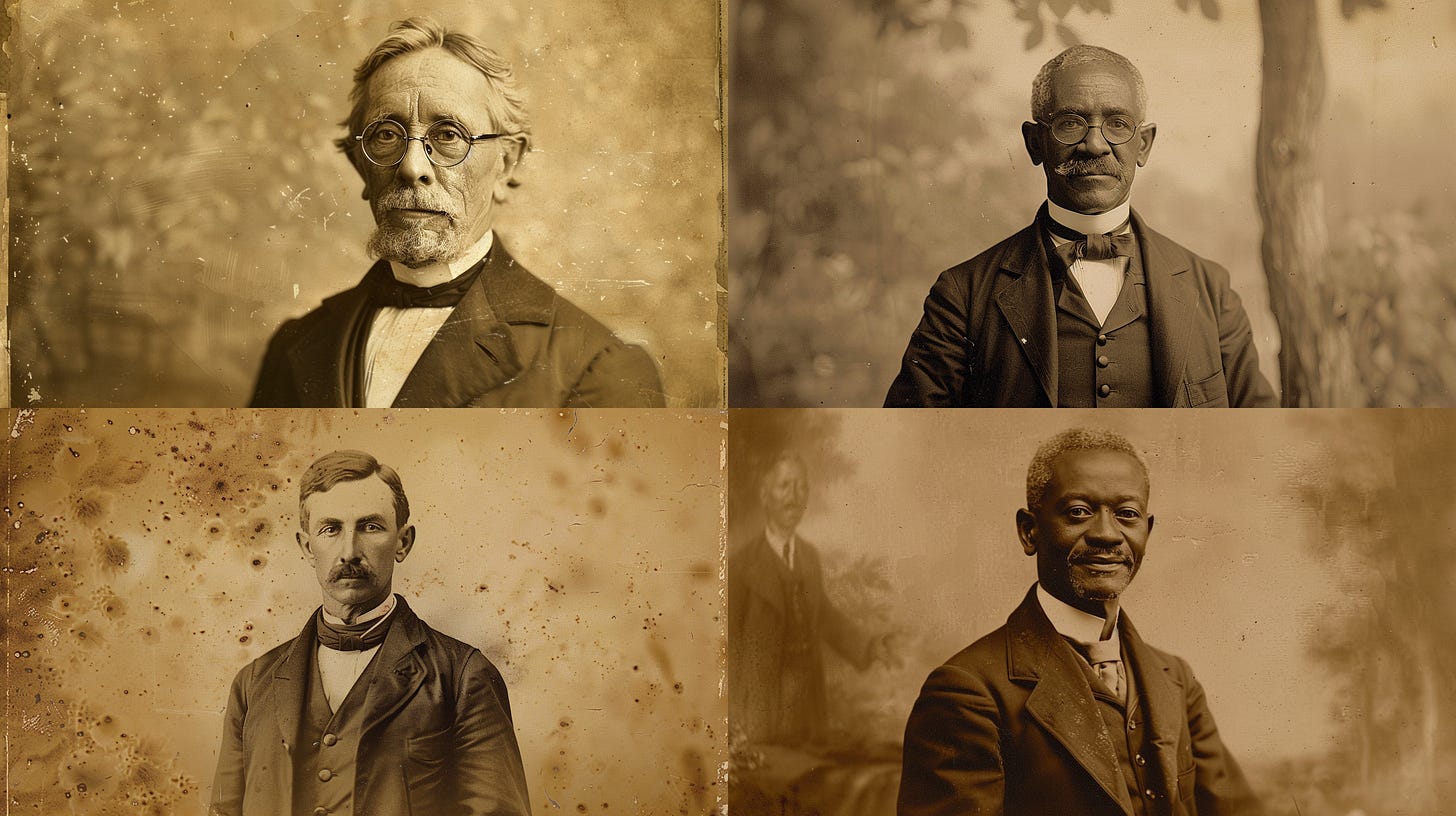

I had to try much harder to get Midjourney to generate an image that includes diversity and is historically incorrect (e.g., “Sepia photo.”, “Taken in 1800.”).

→ The result: Even historically inaccurate depictions are possible at the user’s request.

Let’s assume I added this information to every image generation prompt I submit. Augmenting the (system) prompt isn’t without its challenges. It can lead to overcorrecting.

In the recent case of Google Gemini, it appears that the prompt to introduce additional diversity into the generated output was applied to generating an image of any person. That is likely how historically incorrect depictions such as “U.S. senators in the 1800s”, “U.S. Founding Fathers”, and “popes” were generated with diversity where there was none. But what does a way forward look like?

Three Ways Forward

The motivation for amplifying diversity ranges from noble to economically necessary for a globally used AI product. The problem isn’t so much that models create historically incorrect images but that they do so without users explicitly asking for it. The recent coverage of Google Gemini’s shortcomings does a good job of pointing out the issues. But it has its own shortcomings when it comes to proposing solutions.

Here are three possible scenarios for mitigating issues of overcorrection:

1. Defining system prompts that instruct the model to generate more diverse images than it otherwise would is the best option (for now or even permanently). Add guardrails so that the diversity booster is not applied to historic events or scenes.

→ This approach could fall short of the market’s expectations and continuously be challenged by people’s imagination to break it.

2. Gathering significantly more data from currently underrepresented groups in the real world to balance out overrepresentation.

→ This approach could face economic challenges, being neither easy nor cheap to pull off.

3. Generating more training data in the lab (aka synthetic data) could be another option to mitigate the economic challenges of scenario #2.

→ This approach would need sufficient examples for generating additional ones. But that can already be a bottleneck.
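The first scenario, a diversity booster with a guardrail for historic scenes, could be sketched roughly like this. The keyword list, the year pattern, and the function names are assumptions for illustration only; a production system would need a far more robust way to detect historical context than a handful of keywords.

```python
import re

# Hypothetical hint and a crude, hand-picked list of historical cues.
DIVERSITY_HINT = "Diverse ethnic backgrounds."
HISTORICAL_TERMS = ("founding fathers", "pope", "senators in the 1800s")
# Matches years 1000-1899, with or without a trailing "s" (e.g. "1800s").
YEAR_PATTERN = re.compile(r"\b1[0-8][0-9]{2}s?\b")


def looks_historical(prompt):
    """Return True if the prompt contains one of the historical cues."""
    text = prompt.lower()
    has_term = any(term in text for term in HISTORICAL_TERMS)
    return has_term or bool(YEAR_PATTERN.search(text))


def build_prompt(user_prompt):
    """Add the diversity hint unless the prompt looks historical."""
    prompt = user_prompt.strip()
    if looks_historical(prompt):
        return prompt  # Guardrail: leave historic scenes untouched.
    return f"{prompt} {DIVERSITY_HINT}"


print(build_prompt("Portrait of a business leader."))
print(build_prompt("U.S. senators in the 1800s"))
```

A keyword check like this is easy to get around with a slightly different wording, which is exactly the weakness noted for this scenario.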

Whichever options providers choose, the situation serves as a key reminder for AI leaders and developers about the effects of bias and of attempting to introduce more diversity than there is in the underlying data. For humans to leverage AI’s full potential, AI products must be inclusive, not cater to just the majority.

A phrase frequently heard in tech media is: “We are running out of human-created data to train Generative AI models!”

I wonder: Are we really? Or do we actually just need more diverse data?

Become an AI Leader

Join my bi-weekly live stream and podcast for leaders and hands-on practitioners. Each episode features a different guest who shares their AI journey and actionable insights. Learn from your peers how you can lead artificial intelligence, generative AI & automation in business with confidence.

Join us live

February 27 - Carly Taylor, Director of Franchise Security Strategy at Activision/ Call of Duty, will discuss how you can strengthen your security against Generative AI hackers.

March 05 - Anthony Alcaraz, AI Expert, will join and share how you can extend your Retrieval-Augmented Generation application with a knowledge graph.

March 19 - Sadie St. Lawrence, Founder of Women in Data. [More details soon…]

April 02 - Bernard Marr, Futurist & Author, will discuss how leaders can prepare for converging technologies and AI.

Watch the latest episodes or listen to the podcast

Follow me on LinkedIn for daily posts about how you can lead AI in business with confidence. Activate notifications (🔔) and never miss an update.

Together, let’s turn HYPE into OUTCOME. 👍🏻

—Andreas

[1] The Washington Post. “Inside the secret list of websites that make AI like ChatGPT sound smart.” Published: 19 April 2023. Last accessed: 26 February 2024. https://www.washingtonpost.com/technology/interactive/2023/ai-chatbot-learning/