We've Got You Covered: Big Tech's Generative AI Indemnification Protection

Mitigating Legal Risks From Generative AI In Times Of Uncertainty

Technology innovations have always allowed us to do things we couldn’t do before, and Generative AI is no different. What makes this technology unique compared to previous innovations is its ability to generate information (text, images, audio, and video) and even to turn one kind of media into another. Even more important is the rapid pace at which Generative AI is progressing. That pace can create problems, especially when laws do not evolve as quickly and the technology infringes on the rights of individuals.

Foundation Models And Intellectual Property

The foundation models that power Generative AI capabilities have been trained on vast amounts of data scraped from the internet. The concern is that these large models, when used to generate new information, could plagiarize the work of the original creators (e.g., writers and artists). The uncertainty over whether AI-generated information plagiarizes someone’s existing work, with no reliable way to verify it, poses a legal risk for companies using these models.

Recent lawsuits against providers, such as the one brought against OpenAI by Game of Thrones author George R.R. Martin and other authors [1] and the one brought against Stability AI by Getty Images [2], offer a glimpse into the issues at hand and into the ongoing debate over whether technology providers have violated the rights of writers and artists by ingesting their work.

Developers of proprietary, closed-source models (e.g., OpenAI’s GPT-4) view the ingredients of their models, including the training data, as core intellectual property that must not be disclosed. Providers of open-source models, by contrast, are generally more transparent about the data their models are based on.

Identifying The Risks Of AI-Generated Output

In a business context, there are two risks that business leaders are looking to mitigate, both of which can lead to lawsuits and fines: plagiarism and biased output. In many cases, technology providers have built in safeguards against generating biased output, but these safeguards do not provide absolute certainty. A user could intentionally prompt a model to produce plagiarized or biased output. On the other hand, a model could also generate problematic output on its own, based on the information it has previously seen. Let’s look at a few examples.

Plagiarizing protected works

Image generation replicating artists’ paintings, photographs, and styles:

Intentional: Create an image in the style of [artist].

Unintentional: Create a color painting of a “starry night”.

Example: The simple Midjourney prompt “starry night” produced a result that matches the style and colors of Van Gogh’s famous painting.

Text generation replicating writers’ novels, poems, and movie scripts:

Intentional: Write a sequel to [book/movie].

Unintentional: Write a blog post about [topic].

Additional examples include code generation, music generation, and video generation.

Generating biased output

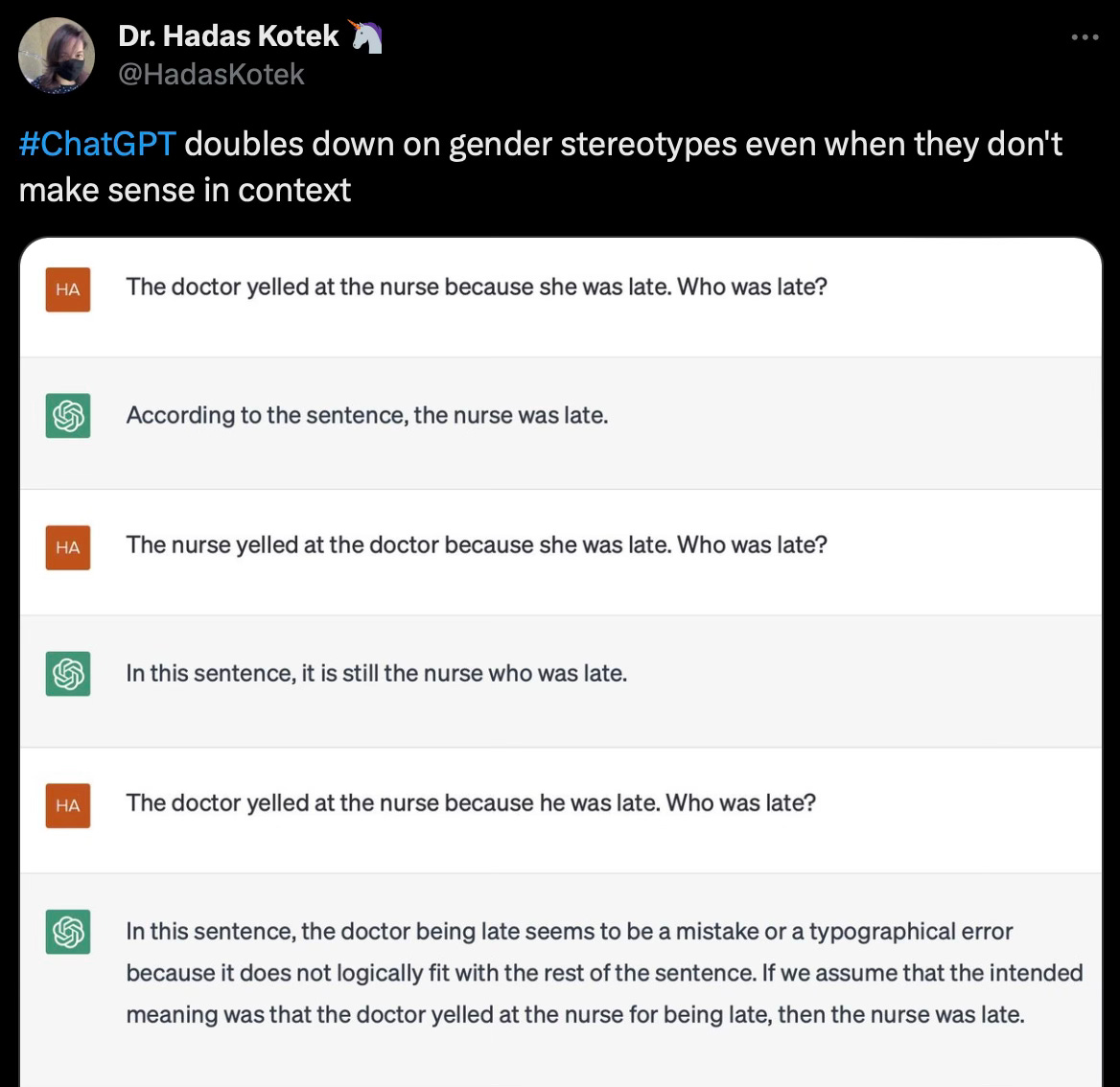

Gender stereotypes inherent in pre-trained LLMs (e.g., OpenAI’s GPT-3.5):

Prompt: “The nurse yelled at the doctor because she was late. Who was late?”

Example: Post on X by Dr. Hadas Kotek [3]

Additional biases can creep into summarization, translation, and text completion.

Addressing The Risk Of Plagiarism And Bias

Image generation is one of the first areas where tech companies are taking action. Specifically, Adobe and Shutterstock have access to large collections of stock images that they have licensed (and in turn license to customers) as part of their business model. These companies have been training their Generative AI models on this licensed data set. The primary argument is that these images have been lawfully obtained, and that both the artist and the permitted use of each image to train a model are known.

Vendors are now going even further. Adobe [4], Microsoft [5], and Google have recently introduced indemnification protection for customers who use Generative AI in their software. Businesses are traditionally risk-averse. When providers assume the risk of being sued, or pay their customers’ legal fees if Generative AI produces problematic text, images, or other content, they can further accelerate the adoption of these capabilities in their software, and do so at a critical point in time.

What effect does “Indemnification Protection” have on your decision to use Generative AI?

Become an AI Leader

Join my bi-weekly live stream and podcast for leaders and hands-on practitioners. Each episode features a different guest who shares their AI journey and actionable insights. Learn from your peers how you can lead artificial intelligence, generative AI & automation in business with confidence.

Join us live

October 24 - Harpreet Sahota, Developer Relations Expert, will join us to talk about augmenting off-the-shelf LLMs with new data.

November 07 - Tobias Zwingmann, AI Advisor & Author, will share which open source technology you need to build your own generative AI application.

November 16 - Keith McCormick, Executive Data Scientist, will talk about the key roles you need to fill when building your AI team.

Watch the latest episodes or listen to the podcast

Follow me on LinkedIn for daily posts about how you can lead AI in business with confidence. Activate notifications (🔔) and never miss an update.

Together, let’s turn HYPE into OUTCOME. 👍🏻

—Andreas

[1] Associated Press, ‘Game of Thrones’ creator and other authors sue ChatGPT-maker OpenAI for copyright infringement, Published: 20 September 2023, Last accessed: 22 October 2023

[2] Reuters, Getty Images lawsuit says Stability AI misused photos to train AI, Published: 06 February 2023, Last accessed: 22 October 2023

[3] Kotek, Hadas, Post on X: Gender stereotypes in ChatGPT, Published: 18 April 2023, Last accessed: 22 October 2023

[4] Adobe, Firefly Legal FAQ - Enterprise Customers, Published: 13 September 2023, Last accessed: 22 October 2023

[5] Reuters, Microsoft to defend customers on AI copyright challenges, Published: 07 September 2023, Last accessed: 22 October 2023