An Agent For Every Person: Why Just One Won’t Do

It Won’t Be Long Before You’ll Actually Hear Someone Proclaim It

Back in the summer of 2016, I worked on a storyline for a visionary video. We called it “A Future With Intelligent Agents”. Machine learning had just started to make its way onto the world stage. Voice assistants like Amazon Alexa were new and promising. The story we envisioned was bigger than the tech in your living room: Imagine a world where there’s an intelligence in your systems that alerts you proactively, that uncovers and selects the best options for you, and that even implements decisions on your behalf.

Based on our story, the intelligent agent would order products from your supplier before you ran out and decide which alternative supplier (one with sufficient items in stock) to source them from. On top of it all, it would negotiate the best price on your behalf. What seemed like the distant future then seems a lot closer and within reach now.

We’ve seen this level of excitement before in the tech industry. And it won’t be long before we see it again: bold proclamations like “A computer on every desk, and in every home.” (Bill Gates, CEO of Microsoft, 1980s) or, building on that quote, “A robot for every person.” (Daniel Dines, CEO of UiPath, 2018). The next quote is already written. It’s just a matter of who will be the first to go on record and say: “An agent for every person.”

The Idea Of Agency

Large Language Models (LLMs) have been trained on vast amounts of text from the internet and can generate output based on that information. To stay with text as an example, common tasks include generating, summarizing, and translating it. But text is not limited to the language that you and I communicate in; it also includes communicating with systems and applications through software code. And that’s where it gets interesting.

This year has brought us attempts at extending LLMs to access information on the web or to chat with a document. Beyond that, early frameworks have emerged that let a user define what an AI-powered application should accomplish without explicitly stating how it should accomplish it. The application figures out the steps on its own, using an LLM to:

understand the user’s intent,

translate it into logical steps,

ask the LLM further questions where knowledge is imperfect,

identify APIs that fulfill these steps,

write code to accomplish the user’s objective, and

execute that code.
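The steps above can be sketched as a small plan-and-execute loop. This is a minimal illustration under stated assumptions, not how any particular framework works: `stub_llm_plan` is a hypothetical stand-in for a real LLM call, the two tools and their inventory data are made up, and the agent selects from predefined tools rather than writing new code.

```python
from typing import Callable, Dict, List

# Hypothetical tools standing in for real APIs; names and data are illustrative.
def check_stock(item: str) -> int:
    """Return units on hand for an item (made-up inventory)."""
    return {"paper": 2, "toner": 40}.get(item, 0)

def place_order(item: str, quantity: int) -> str:
    """Pretend to submit a purchase order."""
    return f"ordered {quantity} x {item}"

TOOLS: Dict[str, Callable] = {"check_stock": check_stock, "place_order": place_order}

def stub_llm_plan(goal: str) -> List[dict]:
    """Stand-in for an LLM call that turns a goal into ordered tool calls.

    A real agent would prompt a model here; this stub returns a fixed plan
    and treats the last word of the goal as the item name.
    """
    item = goal.split()[-1]
    return [
        {"tool": "check_stock", "args": {"item": item}},
        {"tool": "place_order", "args": {"item": item, "quantity": 10}},
    ]

def run_agent(goal: str) -> List[str]:
    """Plan the steps for a goal, then execute each step with a known tool."""
    results = []
    for step in stub_llm_plan(goal):
        tool = TOOLS.get(step["tool"])
        if tool is None:
            # The plan named a tool the application does not offer.
            results.append(f"{step['tool']} -> skipped (unknown tool)")
            continue
        results.append(f"{step['tool']} -> {tool(**step['args'])}")
    return results

print(run_agent("restock paper"))
```

The user states only the goal ("restock paper"); the order of the steps and the choice of tools come from the planner, which is the part an LLM would take over.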

At their recent DevDay, OpenAI unveiled a step in the direction of creating agents by enabling anyone to create a GPT, the precursor to a Generative AI-powered agent. Let’s look at the definition to understand what that even means:

a•gen•cy: the capacity of an actor to act in a given environment.

Agents are not particularly new in AI. Techniques like reinforcement learning have been used to train models (agents) to act in a given environment while maximizing the reward they receive for their choices. At the same time, agents aren’t the only type of AI-driven user assistance that we know.

From AI-Powered Assistance To Agents

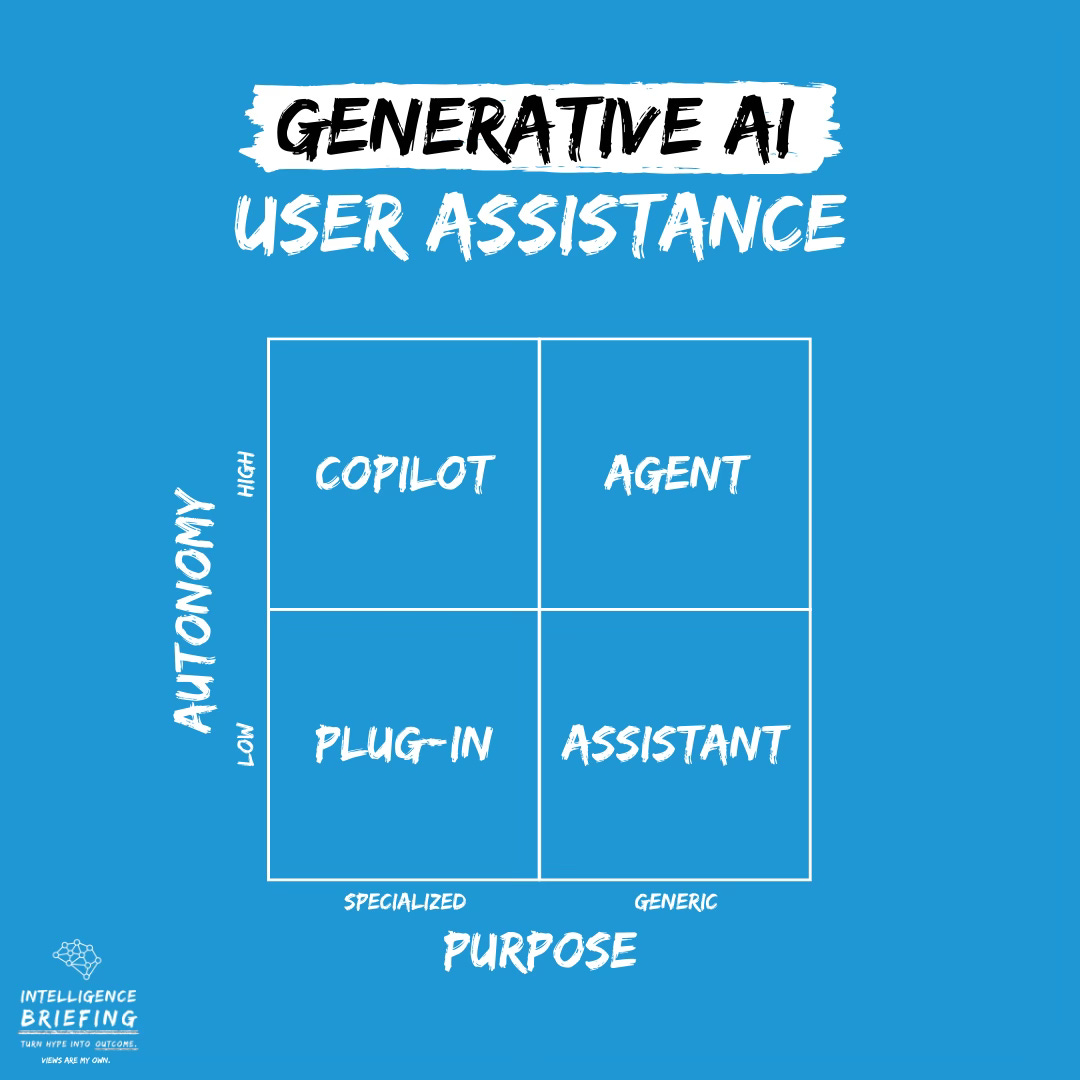

There are at least four terms that are frequently used when describing an AI application that can act on a user’s behalf: plug-ins, assistants, copilots, and agents. Which is which, and what each can do, gets confusing pretty quickly. We can categorize them by purpose (specialized or generic) and level of autonomy (low or high):

Plug-in: Extend an LLM with additional functionality for a narrowly defined task

Example: Gather flight information from a travel site based on user input.

A: Support a user’s task in the background

Example: Provide feedback and corrections on writing, incl. style, tone, syntax.

Copilot: Execute tasks on the user’s behalf in a single domain

Example: Generate code for a Java application based on user input.

Agent: Complete a user’s objective, even if it is just loosely defined

Example: Source product from a list of suppliers based on defined parameters.

The idea of having an agent is particularly exciting in business, because the conditions of a process may vary and there’s much at stake (e.g. money or reputation). Imagine having an AI-driven feature in your procurement application that helps you maintain the optimal level of inventory and that replenishes your inventory at optimal cost. Via its configuration, your company has already given the agent a few boundary conditions within which it should act, e.g. vendor list, ESG criteria, budget range, and quality requirements.

Once the goal has been submitted, the agent is able to craft a Request for Proposal (RfP), identify your top suppliers, connect with them via APIs, negotiate the price, and select the alternative supplier. The agent can reach an outcome that benefits both parties while respecting the configured criteria, such as ESG. If needed, the agent can ask the user clarifying questions and feed the answers back to the LLM as input.
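To make the idea of boundary conditions concrete, here is a minimal sketch of how a procurement agent might filter supplier offers before choosing one. Everything in it is an assumption for illustration: the `Offer` and `Boundaries` types, the 0 to 100 ESG score, and the sample figures are hypothetical, and price negotiation is left out.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import List, Optional, Set

@dataclass
class Offer:
    supplier: str
    unit_price: float
    in_stock: int
    esg_score: int  # hypothetical 0-100 rating

@dataclass
class Boundaries:
    approved_vendors: Set[str]   # the company's vendor list
    min_esg_score: int           # ESG criterion
    max_unit_price: float        # budget limit per unit

def select_offer(offers: List[Offer], bounds: Boundaries, quantity: int) -> Optional[Offer]:
    """Return the cheapest offer that satisfies every boundary condition.

    Returns None when no offer qualifies, which is the point at which
    an agent would go back to the user with a question.
    """
    eligible = [
        o for o in offers
        if o.supplier in bounds.approved_vendors
        and o.esg_score >= bounds.min_esg_score
        and o.unit_price <= bounds.max_unit_price
        and o.in_stock >= quantity
    ]
    return min(eligible, key=lambda o: o.unit_price, default=None)

offers = [
    Offer("Supplier A", 1.10, 500, 80),
    Offer("Supplier B", 0.95, 500, 40),   # fails the ESG criterion
    Offer("Supplier C", 1.20, 500, 90),
    Offer("Supplier D", 0.90, 500, 95),   # not on the vendor list
]
bounds = Boundaries({"Supplier A", "Supplier B", "Supplier C"}, 60, 1.50)
choice = select_offer(offers, bounds, quantity=200)
print(choice.supplier if choice else "no eligible supplier, ask the user")
```

The cheapest offers overall are excluded by the configuration, not by the agent's own judgment, which is what keeps the agent's autonomy inside limits the company has set.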

Why One Agent Just Won’t Do

Modern software relies on Application Programming Interfaces (APIs) to query or submit information or to execute a task. AI-powered applications can already identify the required APIs on their own, then write and execute code to invoke a desired action. Applying the same concept to other APIs (such as those exposed by other agents) will be the next step from assistants to agents, and one we can already anticipate.

Even if agents can solve more general and complex tasks, a single agent will likely not be sufficient given the variety and complexity of those tasks. But one can envision a network of agents that work on tasks and subtasks, each triggering another in the process, to achieve the overarching goal the user has set.
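A network like this can be sketched as agents that each handle one subtask and name the agent to trigger next. The three agents, their hard-coded results, and the hop limit below are all hypothetical; a real system would call services or models at each step.

```python
from typing import Callable, Dict, Optional, Tuple

Task = Dict[str, object]
AgentResult = Tuple[Task, Optional[str]]  # updated task, name of next agent

def sourcing_agent(task: Task) -> AgentResult:
    # Illustrative: pretend a supplier was found, then hand off to negotiation.
    return {**task, "supplier": "Supplier B"}, "negotiation"

def negotiation_agent(task: Task) -> AgentResult:
    # Illustrative: apply a fixed 10% discount, working in whole cents.
    discounted = int(task["list_price_cents"]) * 90 // 100
    return {**task, "unit_price_cents": discounted}, "ordering"

def ordering_agent(task: Task) -> AgentResult:
    # Last agent in the chain: returns no successor.
    return {**task, "status": "ordered"}, None

AGENTS: Dict[str, Callable[[Task], AgentResult]] = {
    "sourcing": sourcing_agent,
    "negotiation": negotiation_agent,
    "ordering": ordering_agent,
}

def run_network(task: Task, start: str = "sourcing", max_hops: int = 10) -> Task:
    """Run agents in sequence, each one naming the agent to trigger next."""
    current: Optional[str] = start
    hops = 0
    while current is not None:
        if hops >= max_hops:
            # Guard against agents triggering each other without end.
            raise RuntimeError("hop limit reached")
        task, current = AGENTS[current](task)
        hops += 1
    return task

print(run_network({"item": "paper", "list_price_cents": 2000}))
```

The hop limit is one simple way to keep a chain of agents from running unchecked, which ties into the containment question raised for AI leaders.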

AI leaders in business need to prepare for two questions:

How can you tap into this growing ecosystem of AI agents?

How can you manage and contain uncontrolled development and use of AI agents that could expose sensitive company data?


Together, let’s turn HYPE into OUTCOME. 👍🏻

—Andreas